
Third-party services

Keycloak

We use Keycloak for user management because it is a convenient, feature-rich system. All passwords are stored in a secure database, making it impossible to retrieve them.

For username we use the user's phone number.

How do we store passwords?

Our system has no passwords. Instead, each time a user logs in we send an SMS containing a 6-digit one-time password (OTP). The OTP expires after OTP_EXPIRATION_TIME milliseconds. The user cannot make another attempt until NEXT_TRY_TIME milliseconds have passed. Both OTP_EXPIRATION_TIME and NEXT_TRY_TIME default to 60000 (60 seconds).

Inside Keycloak, the account attributes hold two timers:

- next_try_timestamp - the timestamp, in server time, from which the next attempt is possible.

- otp_expiration_timestamp - the timestamp, in server time, at which the OTP expires.
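The two timers can be sketched as follows. This is a minimal illustration, assuming both attributes hold milliseconds since the Unix epoch in server time; the function names are hypothetical and are not taken from the project code.

```python
import time

# Defaults from the text; both values are in milliseconds.
OTP_EXPIRATION_TIME = 60_000
NEXT_TRY_TIME = 60_000


def server_now_ms() -> int:
    """Current server time in milliseconds (assumed unit of the attributes)."""
    return int(time.time() * 1000)


def issue_timers(now_ms: int) -> dict:
    """Compute both Keycloak attributes for an OTP issued at now_ms."""
    return {
        "otp_expiration_timestamp": now_ms + OTP_EXPIRATION_TIME,
        "next_try_timestamp": now_ms + NEXT_TRY_TIME,
    }


def otp_is_expired(attrs: dict, now_ms: int) -> bool:
    """True once the OTP expiration timestamp has been reached."""
    return now_ms >= attrs["otp_expiration_timestamp"]


def next_try_allowed(attrs: dict, now_ms: int) -> bool:
    """True once the next attempt is permitted."""
    return now_ms >= attrs["next_try_timestamp"]
```

In use, `issue_timers(server_now_ms())` would be called when the SMS is sent, and the two checks would run against the stored attributes on each login attempt.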

In the development version, we use the 111111 mock for OTPs of any account.

When a user requests an OTP, we encrypt it with an init vector (IV) and a security key:

- We generate a random 6-digit token (OTP) and send it to the user by SMS.

- We get the security key from the environment variables.

- We generate a random init vector, add the OTP and the salt from the code, and store the result as the password of the Keycloak account.

- We store the result of the encryption in the session_data attribute.

When the user logs in (enters the OTP), we use session_data as the raw data and OTP + salt as the init vector.
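The first step of the list above, generating the 6-digit OTP, can be sketched like this. It is only an illustration: the use of Python's `secrets` module, the `development` flag, and the choice to allow leading zeros are assumptions, not details confirmed by the project.

```python
import secrets

# Mock value used for every account in the development version.
DEV_MOCK_OTP = "111111"


def generate_otp(development: bool = False) -> str:
    """Return a 6-digit OTP as a string, zero-padded on the left."""
    if development:
        return DEV_MOCK_OTP
    # randbelow draws from a cryptographically secure source: 0..999999.
    return f"{secrets.randbelow(10**6):06d}"
```

A cryptographically secure generator is used here rather than `random`, because the OTP acts as a short-lived credential.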

This means that the data to be accessed is stored in several parts:

- Kubernetes secrets or environment variables (security key).

- Keycloak (as a password and as an attribute).

- Code constants (this is where we store the salt).

Without all this data, a hacker will not be able to gain access to someone else's account. Moreover, no one but the user himself can see the OTP sent to him.

Roles

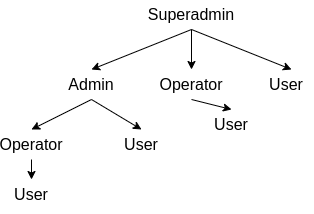

We store information about roles (superadmin, admin, user) inside Keycloak. A superadmin has full control over the system. An admin can manage only the objects they are responsible for. Administrators and users have different privileges. A user is a regular user of the Dipal app. Only superadmins and admins have access to the admin panel (Zoo).
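The role rules above can be expressed as a small check. This is a hypothetical sketch: the `Role` enum, the function names, and the idea of passing the set of objects an admin is responsible for are illustrative assumptions, not the way Keycloak or Dipal actually models them.

```python
from enum import Enum


class Role(Enum):
    SUPERADMIN = "superadmin"
    ADMIN = "admin"
    USER = "user"


def can_access_admin_panel(role: Role) -> bool:
    """Only superadmins and admins may open the admin panel (Zoo)."""
    return role in {Role.SUPERADMIN, Role.ADMIN}


def can_manage(role: Role, object_id: str, responsible_for: set[str]) -> bool:
    """Superadmins manage everything; admins only their own objects."""
    if role is Role.SUPERADMIN:
        return True
    if role is Role.ADMIN:
        return object_id in responsible_for
    return False
```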

HTTPS required

If you try to connect to Keycloak on a server by its external IP address, it returns an "HTTPS required" error. To fix this, first open a shell inside the Keycloak Docker container. If you use Docker Compose, cd to the directory containing the yml file and run:

sudo docker-compose exec keycloak sh

If you are using Docker:

sudo docker exec -it <ID> sh

After you enter the container, run the following commands:

cd /opt/jboss/keycloak/bin

./kcadm.sh config credentials --server http://localhost:8080/auth --realm master --user admin

./kcadm.sh update realms/master -s sslRequired=NONE

"I accidentally deleted an admin account"

If you deleted an admin account, go to the container and execute this from the bin directory:

./add-user-keycloak.sh --server http://ip_address_of_the_server:8080/admin --realm master --user admin --password adminPassword

Firebase

Firebase is a set of hosting services for any type of application. We use the messaging system from it to send data from Dipal to the user, such as push notifications. We set up a websocket connection between Dipal and Firebase servers and between Dipal and Firebase clients.

Integration with Dipal

To connect Firebase to Pigeon, we created an app in the Firebase settings and saved google-services.json. This information must be set in the Pigeon .env file. The frontend requests a registration token from Firebase and sends the received token to Kaiser, and the backend stores it in the registration_token attribute of the user's Keycloak account. When the system needs to send a notification to this user, it takes the registration token from Keycloak and sends the data to the Firebase server.

vapidKey is not a token. A token looks like this:

eUyCZGz_QMKZIzI7QOAq-U:APA91bGTymTn4doKJNl_aBVVqHKXr9y2JyvLjlhzJosPJUYsXBHCEbZL3A1rEpVUZOuvN8UQvlO1_A2bx-ha8lVdHUJ0RDVpyQ3BTxoY6qO2-QcK1ySxAmn5_NXgsOEEjyywh_eyuiZG

Every notification has a data field and, usually, a push field. If a notification has no push part (e.g. an intercom call), the push field is not sent.

Notification example:

{
  "data": {
    "type": "user_place",
    "action": "request_confirmed",
    "member_name": "Кирилл Некрасов",
    "place_name": "flat 7"
  },
  "push": {
    "title": "Place request was accepted!",
    "body": "Кирилл Некрасов confirmed your request to flat 7!"
  }
}
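The rule that the push field is omitted when there is no push notification can be sketched as a small builder. The function name is hypothetical, and the `intercom` / `call` values in the usage example are illustrative placeholders rather than values confirmed by the project.

```python
import json
from typing import Optional


def build_notification(data: dict, push: Optional[dict] = None) -> dict:
    """Build a notification payload; include "push" only when one is given."""
    notification = {"data": data}
    if push is not None:
        notification["push"] = push
    return notification


# A notification with a push part, using the values from the example above.
with_push = build_notification(
    {"type": "user_place", "action": "request_confirmed"},
    {"title": "Place request was accepted!", "body": "Request confirmed"},
)

# A data-only notification (placeholder values for an intercom call).
data_only = build_notification({"type": "intercom", "action": "call"})

print(json.dumps(with_push, ensure_ascii=False, indent=2))
print(json.dumps(data_only, ensure_ascii=False, indent=2))
```

Omitting the key entirely, instead of sending `"push": null`, matches the statement that the push field is not sent at all.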

Apache Kafka

We use Apache Kafka for messaging between microservices: it provides durable, reliable message delivery and fits a microservice architecture well.

Rules for sending messages

Previously, we used the following format for Kafka topics: from_<microservice>_to_<microservice>_to_<action>_v1_0_0, e.g. from_zoo_to_crow_to_delete_settings_v1_0_0. As you can see, the source and destination services are defined statically.

Now we use a different rule for Kafka topics: <microservice>.<module>.<function>, e.g. crow.settings.delete. It no longer matters who the message came from.
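A helper for the current naming rule could look like the sketch below. The function name and the restriction of each part to lowercase letters, digits, and underscores are assumptions made for illustration, so that dots appear only as separators; the project may allow other characters.

```python
import re

# Assumed character set for each part of the topic name.
_PART = re.compile(r"^[a-z0-9_]+$")


def topic_name(microservice: str, module: str, function: str) -> str:
    """Build a topic name of the form <microservice>.<module>.<function>."""
    parts = (microservice, module, function)
    for part in parts:
        if not _PART.match(part):
            raise ValueError(f"invalid topic part: {part!r}")
    return ".".join(parts)
```

For example, `topic_name("crow", "settings", "delete")` yields `crow.settings.delete`, the topic shown above.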

Redis

Redis (Remote Dictionary Server) is an open-source, in-memory NoSQL database management system that works with key-value data structures.

Billing systems

In the future our system is supposed to support various billing systems, but for now it supports only the Comfortel (UUT-Telecom) billing system.

Every account in the billing system has an account number. This account number is specified in the contract with UUT-Telecom. To establish a connection to the billing system server, your server's external IP address must be on the billing system's list of allowed IP addresses. First, obtain an access token for the billing system. The test account is 9999999 with the password password. After that you can request the information about the user.

We save the account_number in the user collection of our database.

Filebeat

MongoDB

MongoDB is a non-relational document database that stores JSON-like documents. The data is stored in documents with a similar structure, which are grouped into collections. Each collection holds one type of record.

To make a field act as a unique key for a document, you can create a unique index on it.

You can use MongoDB Compass to work with our databases.